- 📝 Posted:

- 🚚 Summary of:

- P0252, P0253, P0254, P0255, P0256, P0257

- ⌨ Commits:

- (Seihou)

P0251...e98feef, (Seihou)e98feef...24df71c, (Seihou)24df71c...b7b863b, (Seihou)b7b863b...2b8218e, (Seihou)2b8218e...P0256, (Website)b79c667...e2ba49b - 💰 Funded by:

- Arandui, Ember2528, [Anonymous]

- 🏷 Tags:

And now we're taking this small indie game from the year 2000 and porting its game window, input, and sound to the industry-standard cross-platform API with "simple" in its name.

Why did this have to be so complicated?! I expected this to take maybe 1-2 weeks and result in an equally short blog post. Instead, it raised so many questions that I ended up with the longest blog post so far, by quite a wide margin. These pushes ended up covering so many aspects that could be interesting to a general and non-Seihou-adjacent audience, so I think we need a table of contents for this one:

- Evaluating Zig

- Visual Studio doesn't implement concepts correctly?

- Reusable building blocks for Tup

- Compiling SDL 2

- The new frame rate limiter

- Audio via SDL or SDL_mixer? (Nope, neither)

- miniaudio

- Resampling defective sound effects (including FLAC not always being lossless)

- Joypad input with SDL

- Restoring the original screenshot feature

- Integer math in hand-written ASM

Before we can start migrating to SDL, we of course have to integrate it into

the build somehow. On Linux, we'd ideally like to just dynamically link to a

distribution's SDL development package, but since there's no such thing on

Windows, we'd like to compile SDL from source there. This allows us to reuse

our debug and release flags and ensures that we get debug information,

without needing to clone build scripts for every

C++ library ever in the process or something.

So let's get my Tup build scripts ready for compiling vendored libraries… or

maybe not? Recently, I've kept hearing about a hot new

technology that not only provides the rare kind of jank-free

cross-compiling build system for C/C++ code, but innovates by even

bundling a C++ compiler into a single 279 MiB package with no

further dependencies. Realistically replacing both Visual Studio and Tup

with a single tool that could target every OS is quite a selling point. The

upcoming Linux port makes for the perfect occasion to evaluate Zig, and to

find out whether Tup is still my favorite build system in 2023.

Even apart from its main selling point, there's a lot to like about Zig:

- First and foremost: It's a modern systems programming language with seamless C interop that we could gradually migrate parts of the codebase to. The feature set of the core language seems to hit the sweet spot between C and C++, although I'd have to use it more to be completely sure.

- A native, optimized Hello World binary with no string formatting is 4 KiB when compiled for Windows, and 6.4 KiB when cross-compiled from Windows to Linux. It's so refreshing to see a systems language in 2023 that doesn't bundle a bulky runtime for trivial programs and then defends it with the old excuse of "but all this runtime code will come in handy the larger your program gets". With a first impression like this, Zig managed to realize the "don't pay for what you don't use" mantra that C++ typically claims for itself, but only pulls off maybe half of the time.

- You can directly

target specific CPU models, down to even the oldest 386 CPUs?! How

amazing is that?! In contrast, Visual Studio only describes its

/arch:IA32compatibility option in very vague terms, leaving it up to you to figure out that "legacy 32-bit x86 instruction set without any vector operations" actually means "i586/P5 Pentium, because the startup code still includes an unconditionalCPUIDinstruction". In any case, it means that Zig could also cover the i586 build.- Even better, changing Zig's CPU model setting recompiles both its

bundled C/C++ standard library and Zig's own compiler-rt polyfill

library for that architecture. This ensures that no unsupported

instructions ever show up in the binary, and also removes the need for

any

CPUIDchecks. This is so much better than the Visual Studio model of linking against a fixed pre-compiled standard library because you don't have to trust that all these newer instructions wouldn't actually be executed on older CPUs that don't have them.

- Even better, changing Zig's CPU model setting recompiles both its

bundled C/C++ standard library and Zig's own compiler-rt polyfill

library for that architecture. This ensures that no unsupported

instructions ever show up in the binary, and also removes the need for

any

- I love the auto-formatter. Want to lay out your struct literal into multiple lines? Just add a trailing comma to the end of the last element. It's very snappy, and a joy to use.

- Like every modern programming language, Zig comes with a test framework built into the language. While it's not all too important for my grand plan of having one big test that runs a bunch of replays and compares their game states against the original binary, small tests could still be useful for protecting gameplay code against accidental changes. It would be great if I didn't have to evaluate and choose among the many testing frameworks for C++ and could just use a language standard.

- Package management is still in its infancy, but it's looking pretty good so far, resembling Go's decentralized approach of just pointing to a URL but with specific version selection from the get-go.

However, as a version number of 0.11.0 might already suggest, the whole experience was then bogged down by quite a lot of issues:

- While Zig's C/C++ compilation feature is very well architected to reuse the C/C++ standard libraries of GCC and MinGW and thus automatically keeps up with changes to the C++ standard library, it's ultimately still just a Clang frontend. If you've been working with a Visual Studio-exclusive codebase – which, as we're going to see below, can easily happen even if you compile in C++23 mode – you'd now have to migrate to Clang and Zig in a single step. Obviously, this can't ever be fixed without Microsoft open-sourcing their C++ compiler. And even then, supporting a separate set of command-line flags might not be worth it.

- The standard library is very poorly documented, especially in the build-related parts that are meant to attract the C++ audience.

- Often, the only documentation is found in blog posts from a few years ago, with example code written against old Zig versions that doesn't compile on the newest version anymore. It's all very far from stable.

- However, Zig's project generation sub-commands (

zig init-exeand friends) do emit well-documented boilerplate code? It does make sense for that code to double as a comprehensive example, but Zig advertises itself as so simple that I didn't even think about bootstrapping my project with a CLI tool at first – unlike, say, Rust, where a project always starts with filling out a small form inCargo.toml. - There's no progress output for C/C++ compilation? Like, at all?

- This hurts especially because compilation times are significantly longer than they were with Visual Studio. By default, the current Tupfile builds Shuusou Gyoku in both debug and release configurations simultaneously. If I fully rebuild everything from a clean cache, Visual Studio finishes such a build in roughly the same amount of time that Zig takes to compile just a debug build.

- The

--global-cache-diroption is only supported by specific subcommands of thezigCLI rather than being a top-level setting, and throws an error if used for any other subcommand. Not having a system-wide way to change it and being forced into writing a wrapper script for that is fine, but it would be nice if said wrapper script didn't have to also parse and switch over the subcommand just to figure out whether it is allowed to append the setting. - compiler-rt still needs a bit of dead code elimination work. As soon as

your program needs a single polyfilled function, you get all of them,

because they get referenced in some exception-related table even if nothing

uses them? Changing the

link_eh_frame_hdroption had no effect. - And that was not the only

std.Build.Step.Compileoption that did nothing. Worse, if I just tweaked the options and changed nothing about the code itself, Zig simply copied a previously built executable out of its build cache into the output directory, as revealed by the timestamp on the .EXE. While I am willing to believe that Zig correctly detects that all these settings would just produce the same binary, I do not like how this behavior inspires distrust and uncertainty in Zig's build process as a whole. After all, we still live in a world where clearing the build cache is way too often the solution for weird problems in software, especially when using CMake. And it makes sense why it would be: If you develop a complex system and then try solving the infamously hard problem of cache invalidation on top, the risk of getting cache invalidation wrong is, by definition, higher than if that was the only thing your system did. That's the reason why I like Tup so much: It solely focuses on getting cache invalidation right, and rather errs on the side of caution by maybe unnecessarily rebuilding certain files every once in a while because the compiler may have read from an environment variable that has changed in the meantime. But this is the one job I expect a build system to do, and Tup has been delivering for years and has become fundamentally more trustworthy as a result. - Zig activates Clang's UBSan

in debug builds by default, which executes a program-crashing

UD2instruction whenever the program is about to rely on undefined C++ behavior. In theory, that's a great help for spotting hidden portability issues, but it's not helpful at all if these crashes are seemingly caused by C++ standard library code?! Without any clear info about the actual cause, this just turned into yet another annoyance on top of all the others. Especially because I apparently kept searching for the wrong terms when I first encountered this issue, and only found out how to deactivate it after I already decided against Zig. - Also, can we get

/PDBALTPATH? Baking absolute paths from the filesystem of the developer's machine into released binaries is not only cringe in itself, but can also cause potential privacy or security accidents.

So for the time being, I still prefer Tup. But give it maybe two or three years, and I'm sure that Zig will eventually become the best tool for resurrecting legacy C++ codebases. That is, if the proposed divorce of the core Zig compiler from LLVM isn't an indication that the productive parts of the Zig community consider the C/C++ building features to be "good enough", and are about to de-emphasize them to focus more strongly on the actual Zig language. Gaining adoption for your new systems language by bundling it with a C/C++ build system is such a great and unique strategy, and it almost worked in my case. And who knows, maybe Zig will already be good enough by the time I get to port PC-98 Touhou to modern systems.

(If you came from the Zig wiki, you can stop reading here.)

A few remnants of the Zig experiment still remain in the final delivery. If

that experiment worked out, I would have had to immediately change the

execution encoding to UTF-8, and decompile a few ASM functions exclusive to

the 8-bit rendering mode which we could have otherwise ignored. While Clang

does support inline assembly with Intel syntax via

-fms-extensions, it has trouble with ; comments

and instructions like REP STOSD, and if I have to touch that

code anyway… (The REP STOSD function translated into a single

call to memcpy(), by the way.)

Another smaller issue was Visual Studio's lack of standard library header hygiene, where #including some of the high-level STL features also includes more foundational headers that Clang requires to be included separately, but I've already known about that. Instead, the biggest shocker was that Visual Studio accepts invalid syntax for a language feature as recent as C++20 concepts:

// Defines the interface of a text rendering session class. To simplify this

// example, it only has a single `Print(const char* str)` method.

template <class T> concept Session = requires(T t, const char* str) {

t.Print(str);

};

// Once the rendering backend has started a new session, it passes the session

// object as a parameter to a user-defined function, which can then freely call

// any of the functions defined in the `Session` concept to render some text.

template <class F, class S> concept UserFunctionForSession = (

Session<S> && requires(F f, S& s) {

{ f(s) };

}

);

// The rendering backend defines a `Prerender()` method that takes the

// aforementioned user-defined function object. Unfortunately, C++ concepts

// don't work like this: The standard doesn't allow `auto` in the parameter

// list of a `requires` expression because it defines another implicit

// template parameter. Nevertheless, Visual Studio compiles this code without

// errors.

template <class T, class S> concept BackendAttempt = requires(

T t, UserFunctionForSession<S> auto func

) {

t.Prerender(func);

};

// A syntactically correct definition would use a different constraint term for

// the type of the user-defined function. But this effectively makes the

// resulting concept unusable for actual validation because you are forced to

// specify a type for `F`.

template <class T, class S, class F> concept SyntacticallyFixedBackend = (

UserFunctionForSession<F, S> && requires(T t, F func) {

t.Prerender(func);

}

);

// The solution: Defining a dummy structure that behaves like a lambda as an

// "archetype" for the user-defined function.

struct UserFunctionArchetype {

void operator ()(Session auto& s) {

}

};

// Now, the session type disappears from the template parameter list, which

// even allows the concrete session type to be private.

template <class T> concept CorrectBackend = requires(

T t, UserFunctionArchetype func

) {

t.Prerender(func);

};What's this, Visual Studio's infamous delayed template parsing applied to concepts, because they're templates as well? Didn't they get rid of that 6 years ago? You would think that we've moved beyond the age where compilers differed in their interpretation of the core language, and that opting into a current C++ standard turns off any remaining antiquated behaviors…

So let's actually get my Tup build scripts ready for compiling vendored libraries, because the 📝 previous 70 lines of Lua definitely weren't. For this use case, we'd like to have some notion of distinct build targets that can have a unique set of compilation and linking flags. We'd also like to always build them in debug and release versions even if you only intend to build your actual program in one of those versions – with the previous system of specifying a single version for all code, Tup would delete the other one, which forces a time-consuming and ultimately needless rebuild once you switch to the other version.

The solution I came up with treats the set of compiler command-line options

like a tree whose branches can concatenate new options and/or filter the

versions that are built on this branch. In total, this is my 4th

attempt at writing a compiler abstraction layer for Tup. Since we're

effectively forced to write such layers in Lua, it will always be a

bit janky, but I think I've finally arrived at a solid underlying design

that might also be interesting for others. Hence, I've split off the result

into its own separate

repository and added high-level documentation and a documented example.

And yes, that's a Code Nutrition

label! I've wanted to add one of these ever since I first heard about the

idea, since it communicates nicely how seriously such an open-source project

should be taken. Which, in this case, is actually not all too

seriously, especially since development of the core Tup project has all but

stagnated. If Zig does indeed get better and better at being a Clang

frontend/build system, the only niches left for Tup will be Visual

Studio-exclusive projects, or retrocoding with nonstandard toolchains (i.e.,

ReC98). Quite ironic, given Tup's Unix heritage…

Oh, and maybe general Makefile-like tasks where you just want to run

specific programs. Maybe once the general hype swings back around and people

start demanding proper graph-based dependency tracking instead of just a command runner…

Alright, alternatives evaluated, build system ready, time to include SDL!

Once again, I went for Git submodules, but this time they're held together

by a

batch file that ensures that the intended versions are checked out before

starting Tup. Git submodules have a bad rap mainly because of their

usability issues, and such a script should hopefully work around

them? Let's see how this plays out. If it ends up causing issues after all,

I'll just switch to a Zig-like model of downloading and unzipping a source

archive. Since Windows comes with curl and tar

these days, this can even work without any further dependencies, and will

also remove all the test code bloat.

Compiling SDL from a non-standard build system requires a

bit of globbing to include all the code that is being referenced, as

well as a few linker settings, but it's ultimately not much of a big deal.

I'm quite happy that it was possible at all without pre-configuring a build,

but hey, that's what maintaining a Visual Studio project file does to a

project. ![]()

By building SDL with the stock Windows configuration, we then end up with

exactly what the SDL developers want us to use… which is a DLL. You

can statically link SDL, but they really don't want you to do

that. So strongly, in fact, that they not

merely argue how well the textbook advantages of dynamic linking have worked

for them and gamers as a whole, but implemented a whole dynamic API

system that enforces overridable dynamic function loading even in static

builds. Nudging developers to their preferred solution by removing most

advantages from static linking by default… that's certainly a strategy. It

definitely fits with SDL's grassroots marketing, which is very good at

painting SDL as the industry standard and the only reliable way to keep your

game running on all originally supported operating systems. Well, at least

until SDL 3 is so stable that SDL 2 gets deprecated and won't

receive any code for new backends…

However, dynamic linking does make sense if you consider what SDL is.

Offering all those multiple rendering, input, and sound backends is what

sets it apart from its more hip competition, and you want to have all of

them available at any time so that SDL can dynamically select them based on

what works best on a system. As a result, everything in SDL is being

referenced somewhere, so there's no dead code for the linker to eliminate.

Linking SDL statically with link-time code generation just prolongs your

link time for no benefit, even without the dynamic API thwarting any chance

of SDL calls getting inlined.

There's one thing I still don't like about all this, though. The dynamic

API's table references force you to include all of SDL's subsystems in the

DLL even if your game doesn't need some of them. But it does fit with their

intention of having SDL2.dll be swappable: If an older game

stopped working because of an outdated SDL2.dll, it should be

possible for anyone to get that game working again by replacing that DLL

with any newer version that was bundled with any random newer game. And

since that would fail if the newer SDL2.dll was size-optimized

to not include some of the subsystems that the older game required, they

simply removed (or

) the possibility altogether.

Maybe that was their train of thought? You can always just use the official Windows

DLL, whose whole point is to include everything, after all. 🤷

de-prioritized

So, what do we get in these 1.5 MiB? There are:

- renderer backends for Direct3D 9/11/12, regular OpenGL, OpenGL ES 2.0, Vulkan, and a software renderer,

- input backends for DirectInput, XInput, Raw Input, and all the official game console controllers that can be connected via USB,

- and audio backends for WinMM, DirectSound, WASAPI, and direct-to-disk recording.

Unfortunately, SDL 2 also statically references some newer Windows API functions and therefore doesn't run on Windows 98. Since this build of Shuusou Gyoku doesn't introduce any new features to the input or sound interfaces, we can still use pbg's original DirectSound and DirectInput code for the i586 build to keep it working with the rest of the platform-independent game logic code, but it will start to lag behind in features as soon as we add support for SC-88Pro BGM or more sophisticated input remapping. If we do want to keep this build at the same feature level as the SDL one, we now have a choice: Do we write new DirectInput and DirectSound code and get it done quickly but only for Shuusou Gyoku, or do we port SDL 2 to Windows 98 and benefit all other SDL 2 games as well? I leave that for my backers to decide.

Immediately after writing the first bits of actual SDL code to initialize

the library and create the game window, you notice that SDL makes it very

simple to gradually migrate a game. After creating the game window, you can

call SDL_GetWindowWMInfo()

to retrieve HWND and HINSTANCE handles that allow

you to continue using your original DirectDraw, DirectSound, and DirectInput

code and focus on porting one subsystem at a time.

Sadly, D3DWindower can no longer turn SDL's fullscreen mode into a windowed

one, but DxWnd still works, albeit behaving a bit janky and insisting on

minimizing the game whenever its window loses focus. But in exchange, the

game window can surprisingly be moved now! Turns out that the originally

fixed window position had nothing to do with the way the game created its

DirectDraw context, and everything to do with pbg

blocking the Win32 "syscommand" that allows a window to be moved. By

deleting a system menu… seriously?! Now I'm dying to hear the Raymond

Chen explanation for how this behavior dates back to an unfortunate decision

during the Win16 days or something.

As implied by that commit, I immediately backported window movability to the

i586 build.

However, the most important part of Shuusou Gyoku's main loop is its frame

rate limiter, whose Win32 version leaves a bit of room for improvement.

Outside of the uncapped [おまけ] DrawMode, the

original main loop continuously checks whether at least 16 milliseconds have

elapsed since the last simulated (but not necessarily rendered) frame. And

by that I mean continuously, and deliberately without using any of

the Windows system facilities to sleep the process in the meantime, as

evidenced by a commented-out Sleep(1) call. This has two

important effects on the game:

- The

60Fps DrawModeactually corresponds to a frame rate of(1000 / 16) =62.5 FPS, not 60. Since the game didn't account for the missing 2/3 ms to bring the limit down to exactly 60 FPS, 62.5 FPS is Shuusou Gyoku's actual official frame rate in a non-VSynced setting, which we should also maintain in the SDL port. - Not sleeping the process turns Shuusou Gyoku's frame rate limitation

into a busy-waiting loop, which always uses 100% of a single CPU core just

to wait for the next frame.

Unsurprisingly, SDL features a delay function that properly sleeps the process for a given number of milliseconds. But just specifying 16 here is not exactly what we want:

- Sure, modern computers are fast, but a frame won't ever take an infinitely fast 0 milliseconds to render. So we still need to take the current frame time into account.

SDL_Delay()'s documentation says that the wake-up could be further delayed due to OS scheduling.

To address both of these issues, I went with a base delay time of 15 ms minus the time spent on the current frame, followed by busy-waiting for the last millisecond to make sure that the next frame starts on the exact frame boundary. And lo and behold: Even though this still technically wastes up to 1 ms of CPU time, it still dropped CPU usage into the 0%-2% range during gameplay on my Intel Core i5-8400T CPU, which is over 5 years old at this point. Your laptop battery will appreciate this new build quite a bit.

Time to look at audio then, because it sure looks less complicated than

input, doesn't it? Loading sounds from .WAV file buffers, playing a fixed

number of instances of every sound at a given position within the stereo

field and with optional looping… and that's everything already. The

DirectSound implementation is so straightforward that the most complex part

of its code is the .WAV file parser.

Well, the big problem with audio is actually finding a cross-platform

backend that implements these features in a way that seamlessly works with

Shuusou Gyoku's original files. DirectSound really is the perfect sound API

for this game:

- It doesn't require the game code to specify any output sample format. Just load the individual sound effects in their original format, and playback just works and sounds correctly.

- Its final sound stream seems to have a latency of 10 ms, which is perfectly fine for a game running at 62.5 FPS. Even 15 ms would be OK.

- Sound effect looping? Specified by passing the

DSBPLAY_LOOPINGflag toIDirectSoundBuffer::Play(). - Stereo

panningbalancing? One method call. - Playing the same sound multiple times simultaneously from a single memory buffer? One method call. (It can fail though, requiring you to copy the data after all.)

- Pausing all sounds while the game window is not focused? That's the default behavior, but it can be equally easily disabled with just a single per-buffer flag.

- Future streaming of waveform BGM? No problem either. Windows Touhou has always done that, and here's some code I wrote 12½ years ago that would even work without DirectSound 8's notification feature.

- No further binary bloat, because it's part of the operating system.

The last point can't really be an argument against anything, but we'd still be left with 7 other boxes that a cross-platform alternative would have to tick. We already picked SDL for our portability needs, so how does its audio subsystem stack up? Unfortunately, not great:

- It's fully DIY. All you get is a single output buffer, and you have to do all the mixing and effect processing yourself. In other words, it's the masochistic approach to cross-platform audio.

- There are helper functions for resampling and mixing, but the documentation of the latter is full of FUD. With a disclaimer that so vehemently discourages the use of this function, what are you supposed to do if you're newly integrating SDL audio into a game? Hunt for a separate sound mixing library, even though your only quality goal is parity with stone-age DirectSound? 🙄

- It forces the game to explicitly define the PCM sampling rate, bit

depth, and channel count of the output buffer. You can't

just pass a

nullptrtoSDL_OpenAudioDevice(), and if you pass a zeroedSDL_AudioSpecstructure, SDL just defaults to an unacceptable 22,050 Hz sampling rate, regardless of what the audio device would actually prefer. It took until last year for them to notice that people would at least like to query the native format. But of course, this approach requires the backend to actually provide this information – and since we've seen above that DirectSound doesn't care, the DirectSound version of this function has to actually use the more modern WASAPI, and remains unimplemented if that API is not available.

Standardizing the game on a single sampling rate, bit depth, and channel count might be a decent choice for games that consistently use a single format for all its sounds anyway. In that case, you get to do all mixing and processing in that format, and the audio backend will at most do one final conversion into the playback device's native format. But in Shuusou Gyoku, most sound effects use 22,050 Hz, the boss explosion sound effect uses 11,025 Hz, and the future SC-88Pro BGM will obviously use 44,100 Hz. In such a scenario, you would have to pick the highest sampling rate among all sound sources, and resample any lower-quality sounds to that rate. But if the audio device uses a different sampling rate, those lower-quality sounds would get resampled a second time.

I know that this will be fixed in SDL 3, but that version is still under heavy development. - Positives? Uh… the callback-based nature means that BGM streaming is rather trivial, and would even be comparatively less complicated than with DirectSound. Having a mutex to prevent writes to your sound instance structures while they're being read by the audio thread is nice too.

OK, sure, but you're not supposed to use it for anything more than a single stream of audio. SDL_mixer exists precisely to cover such non-trivial use cases, and it even supports sound effect looping and panning with just a single function call! But as far as the rest of the library is concerned, it manages to be an even bigger disappointment than raw SDL audio:

- As it sits on top of SDL's audio subsystem, it still can't just use your audio device's native sample format.

- Even worse, it insists on initializing the audio device itself, and thus always needs to duplicate whatever you would do for raw SDL.

- It only offers a very opinionated system for streaming – and of course, its opinion is wrong. 😛 The fact that it only supports a single streaming audio track wouldn't matter all too much if you could switch to another track at sample precision. But since you can't, you're forced to implement looping BGM using a single file…

- …which brings us to the unfortunate issue of loop point definitions.

And, perhaps most importantly, the complete lack of any way to set them

through the API?! It doesn't take long until you come up with a theory for

why the API only offers a function to retrieve loop points: The

"music" abstraction is so format-agnostic that it even supports MIDI

and tracker formats where a typical loop point in PCM samples doesn't make

sense. Both of these formats already have in-band ways of specifying loop

points in their respective time units. They

might not be standardized, but it's still much better than usual

single-file solutions for PCM streams where the loop point has to be stored

in an out-of-band way – such as in a metadata tag or an entirely separate

file.

- Speaking of MIDI, why is it so common among these APIs to not have any way of specifying the MIDI device? The fact that Windows Vista removed the Control Panel option for specifying the system-wide default MIDI output device is no excuse for your API lacking the option as well. In fact, your MIDI API now needs such a setting more than it was needed in the Windows XP and 9x days.

- Actually, wait, the API does have a function that is exclusive to tracker formats. Which means that they aren't actually insisting on a clean, consistent, and minimal API here… 🤔

- Funnily enough, they did once receive a patch for a function to set loop points which was never upstreamed… and this patch came from the main developer behind PyTouhou, who needed that feature for obvious reasons. The world sure is a small place.

- As a result, they turned loop points into a property that each

individual format may

or may

not have. Want to loop

MP3 files at sample precision? Tough luck, time to reconvert to another

lossy format. 🙄 This is the exact jank I decided against when I implemented

BGM modding for thcrap back in 2018,

where I concluded that separate intro and

loop files are the way to go.

But OK, we only plan to use FLAC and Ogg Vorbis for the SC-88Pro BGM, for which SDL_mixer does support loop points in the form of Vorbis comments, and hey, we can even pass them at sample accuracy. Sure, it's wrong and everything, but nothing I couldn't work with… - However, the final straw that makes SDL_mixer unsuitable for Shuusou

Gyoku is its core sound mixing paradigm of distributing all sound effects

onto a fixed number of channels, set to 8

by default. Which raises the quite ridiculous question of how many we

would actually need to cover the maximum amount of sounds that can

simultaneously be played back in any game situation. The theoretic maximum

would be 41, which is the combined sum of individual sound buffer instances

of all 20 original sound effects. The practical limit would surely be a lot

smaller, but we could only find out that one through experiments, which

honestly is quite a silly proposition.

- It makes you wonder why they went with this paradigm in the first

place. And sure enough, they actually

use the aforementioned SDL core function for mixing audio. Yes, the

same function whose current documentation advises against using it for

this exact use case. 🙄 What's the argument here? "Sure, 8 is

significantly more than 2, but any mixing artifacts that will occur for

the next 6 sounds are not worrying about, but they get really bad

after the 8th sound, so we're just going to protect you from

that"?

- It makes you wonder why they went with this paradigm in the first

place. And sure enough, they actually

use the aforementioned SDL core function for mixing audio. Yes, the

same function whose current documentation advises against using it for

this exact use case. 🙄 What's the argument here? "Sure, 8 is

significantly more than 2, but any mixing artifacts that will occur for

the next 6 sounds are not worrying about, but they get really bad

after the 8th sound, so we're just going to protect you from

that"?

There is a fork that

does add support for an arbitrary number of music streams, but the rest of

its features leave me questioning the priorities and focus of this project.

Because surely, when I think about missing features in an audio backend, I

immediately think about support

for a vast array of chiptune file formats… 🤪

And wait,

what, they

merged this piece of bloat back into the official SDL_mixer library?!

Thanks for opening

up a vast attack surface for potential security vulnerabilities in code

that would never run for the majority of users, just to cover some niche

formats that nobody would seriously expect in a general audio library. And

that's coming from someone who loves listening to that stuff!

At this rate, I'm expecting SDL_mixer to gain a mail

client by the end of the decade. Hmm, what's the closest audio thing to

a mail client… oh, right, WebRTC! Yeah, let's just casually drop

a giant part of the Chromium codebase into SDL_mixer, what could

possibly go wrong?

raylib is another one of those libraries and has been getting exceptionally popular in recent years, to the point of even having more than twice as many GitHub stars as SDL. By restricting itself to OpenGL, it can even offer an abstraction for shaders, which we'd really like for the 西方Project lens ball effect.

In the case of raylib's audio system, the lack of sound effect looping is the minute API detail that would make it annoying to use for Shuusou Gyoku. But it might be worth a look at how raylib implements all this if it doesn't use SDL… which turned out to be the best look I've taken in a long time, because raylib builds on top of miniaudio which is exactly the kind of audio library I was hoping to find. Let's check the list from above:

- 🟢 miniaudio's high-level API initialization defaults to the native sample format of the playback device. Its internal processing uses 32-bit floating-point samples and only converts back to the native bit depth as necessary when writing the final stream into the backend's audio buffer. WASAPI, for example, never needs any further conversion because it operates with 32-bit floats as well.

- 🟢 The final audio stream uses the same 10 ms update period (and thus, sound effect latency) that I was getting with DirectSound.

- 🟢 Stereo

panningbalancing?ma_sound_set_pan(), although it does require a conversion from Shuusou Gyoku's dB units into a linear attenuation factor. - 🟢 Sound effect looping?

ma_sound_set_looping(). - 🟢 Playing the same sound multiple times simultaneously from a single memory buffer? Perfectly possible, but requires a bit of digging in the header to find the best solution. More on that below.

- 🟢 Future streaming of waveform BGM? Just call

ma_sound_init_from_file()with theMA_SOUND_FLAG_STREAMflag.- 👍 It also comes with a FLAC decoder in the core library and an Ogg Vorbis one as part of the repo, …

- 🤩 … and even supports gapless switching between the intro and loop

files via a single declarative call to

ma_data_source_set_next()!

(Oh, and it also hasma_data_set_loop_point_in_pcm_frames()for anyone who still believes in obviously and objectively inferior out-of-band loop points.)

- 🟢 Pausing all sounds while the game window is not focused? It's not

automatic, but adding new functions to the sound interface and calling

ma_engine_stop()andma_engine_start()does the trick, and most importantly doesn't cause any samples to be lost in the process. - 🟡 Sound control is implemented in a lock-free way, allowing your main game thread to call these at any time without causing glitches on the audio thread. While that looks nice and optimal on the surface, you now have to either believe in the soundness (ha) of the implementation, or verify that atomic structure fields actually are enough to not cause any race conditions (which I did for the calls that Shuusou Gyoku uses, and I didn't find any). "It's all lock-free, don't worry about it" might be easier, but I consider SDL's approach of just providing a mutex to prevent the output callback from running while you mutate the sound state to actually be simpler conceptually.

- 🟡 miniaudio adds 247 KB to the binary in its minimum configuration, a bit more than expected. Some of that is bloat from effect code that we never use, but it does include backends for all three Windows audio subsystems (WASAPI, DirectSound, and WinMM).

- ✅ But perhaps most importantly: It natively supports all modern operating systems that one could seriously want to port this game to, and could be easily ported to any other backend, including SDL.

Oh, and it's written by the same developer who also wrote the best FLAC library back in 2018. And that's despite them being single-file C libraries, which I consider to be massively overrated…

The drawback? Similar to Zig, it's only on version 0.11.18, and also focuses

on good high-level documentation at the expense of an API reference. Unlike

Zig though, the three issues I ran into turned out to be actual and fixable

bugs: Two minor

ones related to looping of streamed sounds shorter than 2 seconds which

won't ever actually affect us before we get into BGM modding, and a critical one that

added high-frequency corruption to any mono sound effect during its

expansion to stereo. The latter took days to track down – with symptoms

like these, you'd immediately suspect the bug to lie in the resampler or its

low-pass filter, both of which are so much more of a fickle and configurable

part of the conversion chain here. Compared to that, stereo expansion is so

conceptually simple that you wouldn't imagine anyone getting it wrong.

While the latter PR has been merged, the fix is still only part of the

dev branch and hasn't been properly released yet. Fortunately,

raylib is not affected by this bug: It does currently

ship version 0.11.16 of miniaudio, but its usage of the library predates

miniaudio's high-level API and it therefore uses a different,

non-SSE-optimized code path for its format conversions.

The only slightly tricky part of implementing a miniaudio backend for

Shuusou Gyoku lies in setting up multiple simultaneously playing instances

for each individual sound. The documentation and answers on the issue

tracker heavily push you toward miniaudio's resource manager and its file

abstractions to handle this use case. We surely could turn Shuusou Gyoku's

numeric sound effect IDs into fake file names, but it doesn't really fit the

existing architecture where the sound interface just receives in-memory .WAV

file buffers loaded from the SOUND.DAT packfile.

In that case, this seems to be the best way:

- Call

ma_decode_memory()to decode from any of the supported audio formats to a buffer of raw PCM samples.

At this point, you can choose between- decoding into the original format the sound effect is stored in, which would require it to be converted to the playback format every time it's played, or

- decoding into 32-bit floats (the native bit depth of the miniaudio engine) and the native sampling rate of the playback device, which avoids any further resampling and floating-point conversion, but takes up more memory.

I went with 2) mainly because it simplified all the debugging I was doing. At a sampling rate of 48,000 Hz, this increases the memory usage for all sound effects from 379 KiB to 3.67 MiB. At least I'm not channel-expanding all sound effects as well here… We've seen earlier that mono➜stereo expansion

is SSE-optimized, so it's very hard to justify a further doubling of the

memory usage here.

We've seen earlier that mono➜stereo expansion

is SSE-optimized, so it's very hard to justify a further doubling of the

memory usage here. - Then, for each instance of the sound, call

ma_audio_buffer_ref_init()to create a reference buffer with its own playback cursor, andma_sound_init_from_data_source()to create a new high-level sound node that will play back the reference buffer.

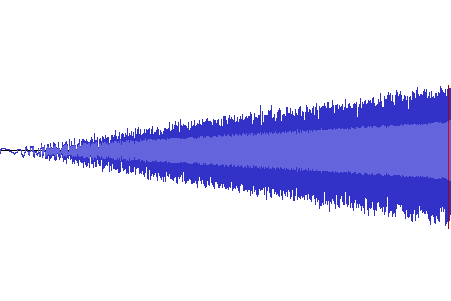

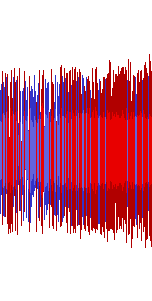

As a side effect of hunting that one critical bug in miniaudio, I've now learned a fair bit about audio resampling in general. You'll probably need some knowledge about basic digital signal behavior to follow this section, and that video is still probably the best introduction to the topic.

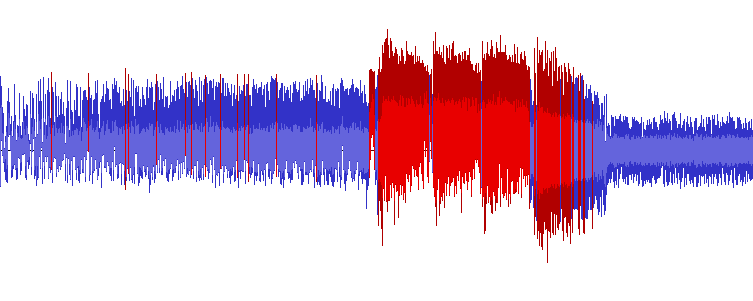

So, how could this ever be an issue? The only time I ever consciously thought about resampling used to be in the context of the Opus codec and its enforced sampling rate of 48,000 Hz, and how Opus advocates claim that resampling is a solved problem and nothing to worry about, especially in the context of a lossy codec. Still, I didn't add Opus to thcrap's BGM modding feature entirely because the mere thought of having to downsample to 44,100 Hz in the decoder was off-putting enough. But even if my worries were unfounded in that specific case: Recording the Stereo Mix of Shuusou Gyoku's now two audio backends revealed that apparently not every audio processing chain features an Opus-quality resampler…

If we take a look at the material that resamplers actually have to work with

here, it quickly becomes obvious why their results are so varied. As

mentioned above, Shuusou Gyoku's sound effects use rather low sampling rates

that are pretty far away from the 48,000 Hz your audio device is most

definitely outputting. Therefore, any potential imaging noise across the

extended high-frequency range – i.e., from the original Nyquist frequencies

of 11,025 Hz/5,512.5 Hz up to the new limit of 24,000 Hz – is

still within the audible range of most humans and can clearly color the

resulting sound.

But it gets worse if the audio data you put into the resampler is

objectively defective to begin with, which is exactly the problem we're

facing with over half of Shuusou Gyoku's sound effects. Encoding them all as

8-bit PCM is definitely excusable because it was the turn of the millennium

and the resulting noise floor is masked by the BGM anyway, but the blatant

clipping and DC offsets definitely aren't:

Wait a moment, true peaks? Where do those come from? And, equally importantly, how can we even observe, measure, and store anything above the maximum amplitude of a digital signal?

The answer to the first question can be directly derived from the Xiph.org video I linked above: Digital signals are lollipop graphs, not stairsteps as commonly depicted in audio editing software. Converting them back to an analog signal involves constructing a continuous curve that passes through each sample point, and whose frequency components stay below the Nyquist frequency. And if the amplitude of that reconstructed wave changes too strongly and too rapidly, the resulting curve can easily overshoot the maximum digital amplitude of 0 dBFS even if none of the defined samples are above that limit.

But I can assure you that I did not create the waveform images above by

recording the analog output of some speakers or headphones and then matching

the levels to the original files, so how did I end up with that image? It's

not an Audacity feature either because the development team argues

that there is no "true waveform" to be visualized as every DAC behaves

differently. While this is correct in theory, we'd be happy just

to get a rough approximation here.

ffmpeg's ebur128 filter has a parameter to measure the true

peak of a waveform and fairly understandable source code, and once I looked

at it, all the pieces suddenly started to make sense: For our purpose of

only looking at digital signals, 💡 resampling to a floating-point signal

with an infinite sampling rate is equivalent to a DAC. And that's

exactly what this filter does: It picks

192,000 Hz and 64-bit float as a format that's close enough to the

ideal of "analog infinity" for all

practical purposes that involve digital audio, and then simply converts

each incoming 100 ms of audio and keeps

the sample with the largest floating-point value.

So let's store the resampled output as a FLAC file and load it into Audacity

to visualize the clipped peaks… only to find all of them replaced with the

typical kind of clipping distortion? 😕 Turns out that I've stumbled over

the one case where the FLAC format isn't lossless and there's

actually no alternative to .WAV: FLAC just doesn't support

floating-point samples and simply truncates them to discrete integers during

encoding. When we measured inter-sample peaks above, we weren't only

resampling to a floating-point format to avoid any quantization to discrete

integer values, but also to make it possible to store amplitudes beyond the

0 dBFS point of ±1.0 in the first place. Once we lose that ability,

these amplitudes are clipped to the maximum value of the integer bit depth,

and baked into the waveform with no way to get rid of them again. After all,

the resampled file now uses a higher sampling rate, and the clipping

distortion is now a defined part of what the sound is.

Finally, storing a digital signal with inter-sample peaks in a

floating-point format also makes it possible for you to reduce the

volume, which moves these peaks back into the regular, unclipped amplitude

range. This is especially relevant for Shuusou Gyoku as you'll probably

never listen to sound effects at full volume.

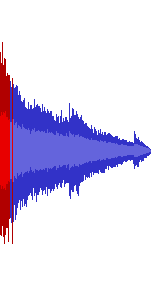

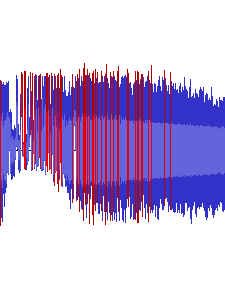

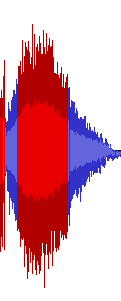

Now that we understand what's going on there, we can finally compare the output of various resamplers and pick a suitable one to use with miniaudio. And immediately, we see how they fall into two categories:

- High-quality resamplers are the ones I described earlier: They cleanly recreate the signal at a higher sampling rate from its raw frequency representation and thus add no high-frequency noise, but can lead to inter-sample peaks above 0 dBFS.

- Linear resamplers use much simpler math to merely interpolate between neighboring samples. Since the newly interpolated samples can only ever stay within 0 dBFS, this approach fully avoids inter-sample clipping, but at the expense of adding high-frequency imaging noise that has to then be removed using a low-pass filter.

miniaudio only comes with a linear resampler – but so does DirectSound as it turns out, so we can get actually pretty close to how the game sounded originally:

As mentioned above, you'll only get this sound out of your DAC at lower volumes where all of the resampled peaks still fit within 0 dBFS. But you most likely will have reduced your volume anyway, because these effects would be ear-splittingly loud otherwise.

±1.0f to the discrete

-32,678 32,767, the maximum value of such

an integer. The resulting straight lines at maximum amplitude in the

time domain then turn into distortion across the entire 24,000 Hz

frequency domain, which then remains a part of the waveform even at

lower volumes. The locations of the high-frequency noise exactly match

the clipped locations in the time-domain waveform images above.The resulting additional distortion can be best heard in

BOSSBOMB, where the low source frequency ensures that any

distortion stays firmly within the hearing range of most humans.

These spectrum images were initially created using ffmpeg's

-lavfi

showspectrumpic=mode=combined:s=1280x720 filter. The samples

appear in the same order as in the waveform above.

And yes, these are indeed the first videos on this blog to have sound! I spent another push on preparing the 📝 video conversion pipeline for audio support, and on adding the highly important volume control to the player. Web video codecs only support lossy audio, so the sound in these videos will not exactly match the spectrum image, but the lossless source files do contain the original audio as uncompressed PCM streams.

Compared to that whole mess of signals and noise, keyboard and joypad input is indeed much simpler. Thanks to SDL, it's almost trivial, and only slightly complicated because SDL offers two subsystems with seemingly identical APIs:

- SDL_Joystick simply numbers all axes and buttons of a joypad and doesn't assign any meaning to these numbers, but works with every joypad ever.

- SDL_GameController provides a consistent interface for the typical kind

of modern gamepad with two analog sticks, a D-pad, and at least 4 face and 2

shoulder buttons. This API is implemented by simply combining SDL_Joystick

with a

long list of mappings for specific controllers, and therefore doesn't

work with joypads that don't match this standard.

According to SDL, this is what a "game controller" looks like. Here's the source of the SVG.

To match Shuusou Gyoku's original WinMM backend, we'd ideally want to keep

the best aspects from both APIs but without being restricted to

SDL_GameController's idea of a controller. The Joy

Pad menu just identifies each button with a numeric ID, so

SDL_Joystick would be a natural fit. But what do we do about directional

controls if SDL_Joystick doesn't tell us which joypad axes correspond to the

X and Y directions, and we don't have the SDL-recommended configuration UI yet?

Doing that right would also mean supporting

POV hats and D-pads, after all… Luckily, all joypads we've tested map

their main X axis to ID 0 and their main Y axis to ID 1, so this seems like

a reasonable default guess.

Fortunately, there

is a solution for our exact issue. We can still try to open a

joypad via SDL_GameController, and if that succeeds, we can use a function

to retrieve the SDL_Joystick ID for the main X and Y axis, close the

SDL_GameController instance, and keep using SDL_Joystick for the rest of the

game.

And with that, the SDL build no longer needs DirectInput 7, certain antivirus scanners will no longer complain about

its low-level keyboard hook, and I turned the original game's

single-joypad hot-plugging into multi-joypad hot-plugging with barely any

code. 🎮

The necessary consolidation of the game's original input handling uncovered

several minor bugs around the High Score and Game Over screen that I

sufficiently described in the release notes of the new build. But it also

revealed an interesting detail about the Joy Pad

screen: Did you know that Shuusou Gyoku lets you unbind all these

actions by pressing more than one joypad button at the same time? The

original game indicated unbound actions with a [Button

0] label, which is pretty confusing if you have ever programmed

anything because you now no longer know whether the game starts numbering

buttons at 0 or 1. This is now communicated much more clearly.

![Joypad button unbinding in the original version of Shuusou Gyoku, indicated by a rather confusing [Button 0] label](/blog/static/2023-09-30-SH01-Joypad-unmapping-original.png?9fe43b80)

![Joypad button unbinding in the P0256 build of Shuusou Gyoku, using a much clearer [--------] label](/blog/static/2023-09-30-SH01-Joypad-unmapping-P0256.png?f7adebb7)

ESC is not bound to any joypad button in

either screenshot, but it's only really obvious in the P0256

build.

With that, we're finally feature-complete as far as this delivery is

concerned! Let's send a build over to the backers as a quick sanity check…

a~nd they quickly found a bug when running on Linux and Wine. When holding a

button, the game randomly stops registering directional inputs for a short

while on some joypads? Sounds very much like a Wine bug, especially if the

same pad works without issues on Windows.

And indeed, on certain joypads, Wine maps the buttons to completely

different and disconnected IDs, as if it simply invents new buttons or axes

to fill the resulting gaps. Until we can differentiate joypad bindings

per controller, it's therefore unlikely that you can use the same joypad

mapping on both Windows and Linux/Wine without entering the Joy Pad menu and remapping the buttons every time you

switch operating systems.

Still, by itself, this shouldn't cause any issues with my SDL event handling

code… except, of course, if I forget a break; in a switch case.

🫠

This completely preventable implicit fallthrough has now caused a few hours

of debugging on my end. I'd better crank up the warning level to keep this

from ever happening again. Opting into this specific warning also revealed

why we haven't been getting it so far: Visual Studio did gain a whole host

of new warnings related to the C++ Core

Guidelines a while ago, including the one I

was looking for, but actually getting the compiler to throw these

requires activating

a separate static analysis mode together with a plugin, which

significantly slows down build times. Therefore I only activate them for

release builds, since these already take long enough. ![]()

But that wasn't the only step I took as a result of this blunder. In addition, I now offer free fixes for regressions in my mod releases if anyone else reports an issue before I find it myself. I've already been following this policy 📝 earlier this year when mu021 reported the unblitting bug in the initial release of the TH01 Anniversary Edition, and merely made it official now. If I was the one who broke a thing, I'll fix it for free.

Since all that input debugging already started a 5th push, I

might as well fill that one by restoring the original screenshot feature.

After all, it's triggered by a key press (and is thus related to the input

backend), reads the contents of the frame buffer (and is thus related to the

graphics backend), and it honestly looks bad to have this disclaimer in the

release notes just because we're one small feature away from 100% parity

with pbg's original binary.

Coincidentally, I had already written code to save a DirectDraw surface to a

.BMP file for all the debugging I did in the last delivery, so we were

basically only missing filename generation. Except that Shuusou

Gyoku's original choice of mapping screenshots to the PrintScreen key did

not age all too well:

- As of Windows XP's 64-bit version, you can no longer use standard window messages to detect that this key is being pressed.

- And as of Windows 11, the OS takes full control of the key by binding it to the Snipping Tool by default, complete with a UI that politely steals focus when hitting that key.

As a result, both Arandui and I independently arrived at the idea of remapping screenshots to the P key, which is the same screenshot key used by every Windows Touhou game since TH08.

The rest of the feature remains unchanged from how it was in pbg's original build and will save every distinct frame rendered by the game (i.e., before flipping the two framebuffers) to a .BMP file as long as the P key is being held. At a 32-bit color depth, these screenshots take up 1.2 MB per frame, which will quickly add up – especially since you'll probably hold the P key for more than 1/60 of a second and therefore end up saving multiple frames in a row. We should probably compress them one day.

Since I already translated some of Shuusou Gyoku's ASM code to C++ during

the Zig experiment, it made sense to finish the fifth push by covering the

rest of those functions. The integer math functions are used all throughout

the game logic, and are the main reason why this goal is important for a

Linux port, or any port to a 64-bit architecture for that matter. If you've

ever read a micro-optimization-related blog post, you'll know that hand-written ASM is a great recipe that often results in the finest jank, and the game's square root function definitely delivers in that regard, right out of the gate.

What slightly differentiates this algorithm from the typical definition of

an integer

square root is that it rounds up: In real numbers, √3 is

≈ 1.73, so isqrt(3) returns 2 instead of 1. However, if

the result is always rounded down, you can determine whether you have to

round up by simply squaring the calculated root and comparing it to the radicand. And even that

is only necessary if the difference between the two doesn't naturally fall

out of the algorithm – which is what also happens with Shuusou Gyoku's

original ASM code, but pbg

didn't realize this and squared the result regardless. ![]()

That's one suboptimal detail already. Let's call the original ASM function

in a loop over the entire supported range of radicands from 0 to

231 and produce a list of results that I can verify my C++

translation against… and watch as the function's linear time complexity with

regard to the radicand causes the loop to run for over 15 hours on my

system. 🐌 In a way, I've found the literal opposite of Q_rsqrt()

here: Not fast, not inverse, no bit hacks, and surely without the

awe-inspiring kind of WTF.

I really didn't want to run the same loop over a

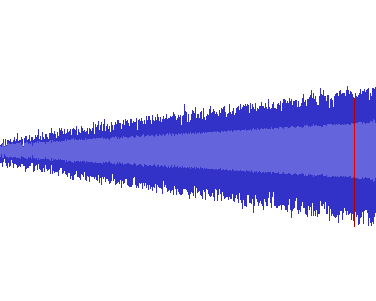

literal C++ translation of the same algorithm afterward. Calculating

integer square roots is a common problem with lots of solutions, so let's

see if we can go better than linear.

And indeed, Wikipedia also has a bitwise algorithm that runs in logarithmic time, uses only additions, subtractions, and bit shifts, and even ends up with an error term that we can use to round up the result as necessary, without a multiplication. And this algorithm delivers the exact same results over the exact same range in… 50 seconds. 🏎️ And that's with the I/O to print the first value that returns each of the 46,341 different square root results.

"But wait a moment!", I hear you say. "Why are you bothering with

an integer square root algorithm to begin with? Shouldn't good old

round(sqrt(x)) from <math.h> do the trick

just fine? Our CPUs have had SSE for a long time, and this probably compiles

into the single SQRTSD instruction. All that extra

floating-point hardware might mean that this instruction could even run in

parallel with non-SSE code!"

And yes, all of that is technically true. So I tested it, and my very

synthetic and constructed micro-benchmark did indeed deliver the same

results in… 48 seconds. ![]() That's not enough of a

difference to justify breaking the spirit of treating the FPU as lava that

permeates Shuusou Gyoku's code base. Besides, it's not used for that much to

begin with:

That's not enough of a

difference to justify breaking the spirit of treating the FPU as lava that

permeates Shuusou Gyoku's code base. Besides, it's not used for that much to

begin with:

- pre-calculating the 西方Project lens ball effect

- the fade animation when entering and leaving stages

- rendering the circular part of stationary lasers

- pulling items to the player when bombing

After a quick C++ translation of the RNG function that spells out a 32-bit

multiplication on a 32-bit CPU using 16-bit instructions, we reach the final

pieces of ASM code for the 8-bit atan2() and trapezoid

rendering. These could actually pass for well-written ASM code in how they

express their 64-bit calculations: atan8() prepares its 64-bit

dividend in the combined EDX and EAX registers in

a way that isn't obvious at all from a cursory look at the code, and the

trapezoid functions effectively use Q32.32 subpixels. C++ allows us to

cleanly model all these calculations with 64-bit variables, but

unfortunately compiles the divisions into a call to a comparatively much

more bloated 64-bit/64-bit-division polyfill function. So yeah, we've

actually found a well-optimized piece of inline assembly that even Visual

Studio 2022's optimizer can't compete with. But then again, this is all

about code generation details that are specific to 32-bit code, and it

wouldn't be surprising if that part of the optimizer isn't getting much

attention anymore. Whether that optimization was useful, on the other hand…

Oh well, the new C++ version will be much more efficient in 64-bit builds.

And with that, there's no more ASM code left in Shuusou Gyoku's codebase,

and the original DirectXUTYs directory is slowly getting

emptier and emptier.

Phew! Was that everything for this delivery? I think that was everything. Here's the new build, which checks off 7 of the 15 remaining portability boxes:

Next up: Taking a well-earned break from Shuusou Gyoku and starting with the preparations for multilingual PC-98 Touhou translatability by looking at TH04's and TH05's in-game dialog system, and definitely writing a shorter blog post about all that…