- 📝 Posted:

- 🚚 Summary of:

- P0246, P0247, P0248, P0249, P0250, P0251

- ⌨ Commits:

- (Seihou) P0226...152ad74, (Seihou) 152ad74...54c3c4e, (Seihou) 54c3c4e...62ff407, (Seihou) 62ff407...a1f80a3, (Seihou) a1f80a3...629ddd8, (Seihou) 629ddd8...P0251

- 💰 Funded by:

- Ember2528, Arandui, alp-bib

- 🏷 Tags:

And then I'm even late by yet another two days… For some reason, preparing

Shuusou Gyoku for an OpenGL port has been the most difficult and drawn-out

task I've worked on so far throughout this project. These pushes were in

development since April, for over two months in total. Tackling a legacy

codebase with such a vague goal while simultaneously wanting to keep

everything running did not do me any favors, and it was pretty hard to

resist the urge to fix everything that had better be fixed to make

this game portable…

📝 2022 ended with Shuusou Gyoku working at full speed on Windows ≥8 by itself, without external tools, for the first

time. However, since it all came down to just one small bugfix, the

resulting build still had several issues:

- The game might still start in the slow, mitigated 8-bit or 16-bit mode if the respective app compatibility flag is still present in the registry from the earlier 📝 P0217 build. A player would then have to manually put the game into 32-bit mode via the Option menu to make it run at its actual intended speed. Bypassing this flag programmatically would require some rather fiddly .EXE patching techniques. (#33)

- The 32-bit mode tends to lag significantly if a lot of sprites are onscreen, for example when canceling the final pattern of the Extra Stage midboss. (#35)

- If the game window loses and regains focus during the ending (for example via Alt-Tabbing), the game reloads the wrong sprite sheet. (#19)

- And, of course, we still have no native windowed mode, or support for rendering in the higher resolutions you'd want to use on modern high-DPI displays. (#7)

Now, we could tackle all of these issues one by one, in focused pushes… or wait for one hero to fund a full-on OpenGL backend as part of the larger goal of porting this game to Linux. This would take much longer, but fix all these issues at once while bringing us significantly closer to Shuusou Gyoku being cross-platform. Which is exactly what Ember2528 did.

Shuusou Gyoku is a very Windows-native codebase. Its usage of types

declared in <windows.h> even extends to core gameplay

code, the rendering code is completely architected around DirectDraw's

features and drawbacks, and text rendering is not abstracted at all. Looks

like it's now my task to write all the abstractions that pbg didn't manage

to write…

Therefore, I chose to stay with DirectDraw for a few more pushes while I

built these abstractions. In hindsight, this was the least efficient

approach one could possibly imagine for the exact goal of porting the game

to Linux. Suddenly, I had to understand all this DirectDraw and GDI

jank, just to keep the game running at every step along the way. Retaining

Shuusou Gyoku's 8-bit mode in particular was a huge pain, but I didn't want

to remove it because it's currently the only way I can easily debug the game

in windowed mode at a scaled resolution, through DxWnd. In 16-bit or

32-bit mode, DxWnd slows down to a crawl, roughly resembling the performance

drop we used to get with Windows' own compatibility mitigations for the

original build.

The upside, though, is that everything I've built so far still works with

the original 8-bit and 16-bit graphics modes. And with just one compiler flag to disable

any modern x86 instructions, my build can still run on i586/P5 Pentium

CPUs, and only requires KernelEx and its latest

Kstub822 patches to run on Windows 98. And, surprisingly, my core

audience does appreciate this fact. Thus, I will include an i586 build

in all of my upcoming Shuusou Gyoku releases from now on. Once this codebase

can compile into a 64-bit binary (which will obviously be required for a

native Linux build), the i586 build will remain the only 32-bit Windows

build I'll include in my releases.

So, what was DirectDraw? In the shortest way that still describes it

accurately from the point of view of a developer: "A hardware acceleration

layer over Ye Olde Win32 GDI, providing double-buffering and fast blitting

of rectangles." There's the primary double-buffered framebuffer

surface, the offscreen surfaces that you create (which are

comparable to what 3D rendering APIs would call textures), and you

can blit rectangular regions between the two. That's it. Except for

double-buffering, DirectDraw offers no feature that GDI wouldn't also

support, while not covering some of GDI's more complex features. I mean,

DirectDraw can blit rectangles only? How

lame.

However, DirectDraw's relative lack of features is not as much of a problem

as it might appear at first. The reason for that lies in what I consider to

be DirectDraw's actual killer feature: compatibility with GDI's device

context (DC) abstraction. By acquiring a DC for a DirectDraw surface,

you can use all existing GDI functions to draw onto the surface, and, in

general, it will all just work. 😮 Most notably, you can use GDI's blitting

functions (i.e., BitBlt() and friends) to transfer pixel data

from a GDI HBITMAP in system memory onto a DirectDraw surface

in video memory, which is the easiest and most straightforward way to, well,

get sprite data onto a DirectDraw surface in the first place.

In theory, you could do that without ever touching GDI by locking the

surface memory and writing the raw bytes yourself. But in practice, you

probably won't, because your game has to run under multiple bit depths and

your data files typically only store one copy of all your sprites in a

single bit depth. And the necessary conversion and palette color matching…

is a mere implementation detail of GDI's blitting functions, using a

supposedly optimized code path for every permutation of source and

destination bit depths.

All in all, DirectDraw doesn't look too bad so far, does it? Fast blitting,

and you can still use the full wealth of GDI functions whenever needed… at

the small cost of potentially losing your surface memory at any time. 🙄

Yup, if a DirectDraw game runs in true resolution-changing fullscreen mode

and you switch to the Windows desktop, all your surface memory is freed and

you have to manually restore it once the game regains focus, followed by

manually copying all intended bitmap data back onto all surfaces. DirectDraw

is where this concept of surface loss originated, which later carried over

to the earlier versions of Direct3D and, infamously,

Direct2D as well.

Looking at it from the point of view of the mid-90s, it does make sense to

let the application handle trashed video memory if that's an unfortunate

reality that your graphics API implementation has to deal with. You don't

want to retain a second copy of each surface in a less volatile part of

memory because you don't have that much of it. Instead, the application can

now choose the most appropriate way to restore each individual surface. For

procedurally generated surfaces, it could just re-run the generating code,

whereas all the fixed sprite sheets could be reloaded from disk.

In practice though, this well-intentioned freedom turns into a huge pain.

Suddenly, it's no longer enough to load every sprite sheet once before it's

needed, blit its pixel data onto the DirectDraw surface, and forget about

it. Now, the renderer must also be able to refresh the pixel data of every

surface from within itself whenever any of DirectDraw's blitting

functions fails with a DDERR_SURFACELOST error. This fact alone

is enough to push your renderer interface towards central management and

allocation of surfaces. You could maybe avoid the conceptual

SurfaceManager by bundling each surface with a regeneration

callback, but why should you? Any other graphics API would work with

straight-line procedural load-and-forget initialization code, so why slice

that code into little parts just because of some DirectDraw quirk?

So if your surfaces can get trashed at any time, and you already use

GDI to copy them from system memory to DirectDraw-managed video memory,

and your game features at least one procedurally generated surface…

you might as well retain every currently loaded surface in the form of an

additional GDI device-independent bitmap. 🤷 In fact, that's even better

than what Shuusou Gyoku did originally: For all .BMP-sourced surfaces, it

only kept a buffer of the entire decompressed .BMP file data, which means

that it had to recreate said intermediate GDI bitmap every time it needed to

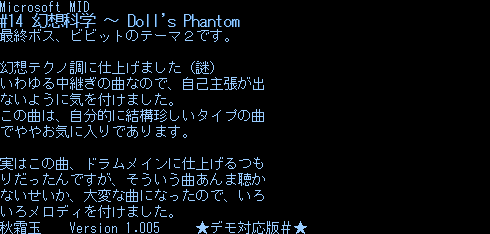

restore a surface. The in-game music title was originally restored

via regeneration callback that re-rendered the intended title directly onto

the DirectDraw surface, but this was handled by an additional "restore hook"

system that remained unused for anything else.
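To make the retained-copy idea a bit more concrete, here is a minimal and portable sketch. It deliberately does not call DirectDraw or GDI: `FakeSurface`, `RetainedSurface`, `SimulateLoss()`, and `PixelsForBlit()` are hypothetical names made up for this illustration, and the "video memory" is just a `std::optional` that can be emptied at any time.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical stand-in for a DirectDraw offscreen surface. An empty `vram`
// models surface memory that the system has freed behind our back.
struct FakeSurface {
    std::optional<std::vector<uint8_t>> vram;
    bool Lost() const { return !vram.has_value(); }
};

// Bundles a surface with a retained system-memory copy of its pixels,
// which plays the role of the GDI DIB described above.
class RetainedSurface {
    FakeSurface surface;
    std::vector<uint8_t> retained;

public:
    unsigned restore_count = 0;

    explicit RetainedSurface(std::vector<uint8_t> pixels)
        : retained(std::move(pixels)) {
        assert(!retained.empty());
        surface.vram = retained; // Initial upload
    }

    // Simulates what happens when the player switches to the desktop.
    void SimulateLoss() { surface.vram.reset(); }

    // Every blit goes through here. A lost surface is refreshed from the
    // retained copy first, so callers never have to care about surface loss.
    const std::vector<uint8_t>& PixelsForBlit() {
        if (surface.Lost()) {
            surface.vram = retained;
            restore_count++;
        }
        return *surface.vram;
    }
};
```

With something like this, the loading code can stay in the straight-line load-and-forget style, because restoration never leaves the renderer.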

Anything more involved would be a micro-optimization, especially since the

goal is to get away from DirectDraw here. Not much point in "neatly"

reloading sprite surfaces from disk if the total size of all loaded sprite

sheets barely exceeds the 1 MiB mark. Also, keeping these GDI DIBs loaded

and initialized does speed up getting back into the game… in theory,

at least. After all, the game still runs in fullscreen mode, and resolution

switching already takes longer on modern flat-panel displays than any

surface restoration method we could come up with.

So that was all pretty annoying. But once we start rendering in 8-bit mode,

it gets even worse as we suddenly have to bother with palette management.

Similar to PC-98 Touhou, Shuusou Gyoku

uses way too many different palettes. In fact, it creates

a separate DirectDraw palette to retain the palette embedded into every

loaded .BMP file, and simply sets the palette of the primary surface and the

backbuffer to the one it loaded last. Like, why would you retain

per-surface palettes, and what effect does this even have? What even happens

when you blit between two DirectDraw surfaces that have different palettes?

Might this be the cause of the discolored in-game music title when playing

under DxWnd? 😵

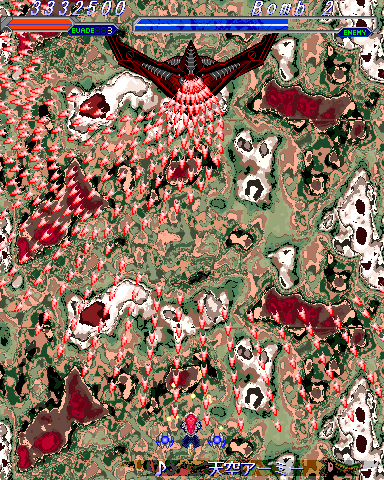

But if we try throwing out those extra palettes, it

only takes until Stage 3 for us to be greeted with… the infamous golf

course:

As you might have guessed, these exact colors come from Gates' face sprite,

whose palette apparently doesn't match the sprite sheets used in Stage 3.

Turns out that 256 colors are not enough for what Shuusou Gyoku would like

to use across the entire stage. In sprite loading order:

| Sprite sheet | GRAPH.DAT file | Additional unique colors | Total unique colors |
|---|---|---|---|
| General system sprites | #0 | +96 | 96 |
| Stage 3 enemies | #3 | +42 | 138 |
| Stage 3 map tiles | #9 | +40 | 178 |
| Wide Shot bomb cut-in | #26 | +3 | 181 |
| VIVIT's faceset | #13 | +40 | 221 |
| Unknown face | #14 | +35 | 256 |
| Gates' faceset | #17 | +40 | 296 |

And that's why Shuusou Gyoku does not only have to retain these palettes, but also contains stage script commands (!) to switch the current palette back to either the map or enemy one, after the dialog system enforced the face palette.
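The running totals from the table can be double-checked with a few lines of code. This is only a sketch of the arithmetic: `FirstPaletteOverflow()` is a hypothetical helper, and the per-sheet color counts are the ones listed in the table, not something the code measures from GRAPH.DAT.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <vector>

// Walks the sprite sheets in loading order and returns the index of the
// first one whose additional colors no longer fit into a shared palette.
std::optional<std::size_t> FirstPaletteOverflow(
    const std::vector<unsigned>& additional_unique_colors,
    unsigned palette_size = 256
) {
    assert(palette_size > 0);
    unsigned total = 0;
    for (std::size_t i = 0; i < additional_unique_colors.size(); i++) {
        total += additional_unique_colors[i];
        if (total > palette_size) {
            return i;
        }
    }
    return std::nullopt;
}
```

Fed with the column of additional colors (96, 42, 40, 3, 40, 35, 40), this returns index 6, which is Gates' faceset. Everything before it adds up to exactly 256 colors.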

But the worst aspects about palettes rear their ugly head at the boundary between GDI and DirectDraw, when GDI adds its own palettes into the mix. None of the following points are clearly documented in either ancient or current MSDN, forcing each new DirectDraw developer to figure them out on their own:

- When calling IDirectDraw::CreateSurface() in 8-bit mode, DirectDraw automatically sets up the newly created surface with a reference (not a copy!) to the palette that's currently assigned to the primary surface.

- When locking an 8-bit surface for GDI blitting via IDirectDrawSurface::GetDC(), DirectDraw is supposed to set the GDI palette of the returned DC to the current palette of the DirectDraw… primary surface?! Not the surface you're actually calling GetDC() on?!

  Interestingly, it took until March of this year for DxWnd to discover a different game that relied on this detail, while DDrawCompat had implemented it for years. DxWnd version 2.05.95 then introduced the DirectX(2) → Fix DC palette tweak, and it's this option that would fix the colors of the in-game music title on any Shuusou Gyoku build older than P0251.

- Make sure to never BitBlt() from a 24-bit RGB GDI image to a palettized 8-bit DirectDraw offscreen surface. You might be tempted to just go 24-bit because there's no palette to worry about and you can retain a single GDI image for every supported bit depth, but the resulting palette mapping glitches will be much worse than if you just stayed in 8-bit. If you want to procedurally generate a GDI bitmap for a DirectDraw surface, for example if you need to render text, just create a bitmap that's compatible with the DC of DirectDraw's primary or backbuffer surface. Doing that magically removes all palette woes, and CreateCompatibleBitmap() is much easier to call anyway.

Ultimately, all of this is why Shuusou Gyoku's original DirectDraw backend

looks the way it does. It might seem redundant and inefficient in places,

but pbg did in fact discover the only way where all the undocumented GDI and

DirectDraw color mapping internals come together to make the game look as

intended. 🧑‍🔬

And what else are you going to do if you want to target old hardware? My

PC-9821Nw133, for example, can only run the original Shuusou Gyoku in 8-bit

mode. For a Windows game on such old hardware, 8-bit DirectDraw looks like

the only viable option. You certainly don't want to use GDI alone, because

that's probably slow and you'd have to worry about even more palette-related

issues. Although people have reported that Shuusou Gyoku does actually

run faster on their old Windows 9x machine if they disable DirectDraw

acceleration…?

In that case, it might be worth a try to write a completely new 8-bit

software renderer, employing the same retained VRAM techniques that the

PC-98 Touhou games used to implement their scrolling playfields with a

minimum of redraws. The hardware scrolling feature of the PC-98 GDC would

then be replicated by blitting the playfield in two halves every frame. I

wonder how fast that would be…
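For illustration, the two-halves idea could look roughly like the following sketch. It assumes a retained buffer that wraps around vertically, with `scroll_row` marking the retained row that currently sits at the top of the screen. `BlitScrolled()` and its parameters are hypothetical names, and plain byte vectors stand in for the actual surfaces.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Copies a vertically wrapping retained buffer to the screen in at most two
// contiguous blits, emulating what hardware scrolling would do for free.
void BlitScrolled(
    std::vector<uint8_t>& screen,
    const std::vector<uint8_t>& retained,
    std::size_t pitch,     // Bytes per row
    std::size_t rows,
    std::size_t scroll_row // Retained row shown at the top of the screen
) {
    assert(retained.size() == (pitch * rows));
    assert(screen.size() == retained.size());
    assert(scroll_row < rows);

    const auto upper_bytes = static_cast<std::ptrdiff_t>((rows - scroll_row) * pitch);
    const auto lower_bytes = static_cast<std::ptrdiff_t>(scroll_row * pitch);

    // First half: retained rows [scroll_row, rows) go to the top of the screen.
    std::copy_n(retained.begin() + lower_bytes, upper_bytes, screen.begin());

    // Second half: retained rows [0, scroll_row) fill the remainder below.
    std::copy_n(retained.begin(), lower_bytes, screen.begin() + upper_bytes);
}
```

Scrolling by one row then only requires redrawing that single row in the retained buffer and moving `scroll_row`, while the two copies take care of presenting everything in the right order.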

Or you go straight back to DOS, and bring your own font renderer and

MIDI/PCM sound driver.

So why did we have to learn about all this? Well, if GDI functions can

directly render onto any kind of DirectDraw surface, this also includes text

rendering functions like TextOut() and DrawText().

If you're really lazy, you can even render your text directly onto

the DirectDraw backbuffer, which probably re-rasterizes all glyphs

every frame!

Which, you guessed it, is exactly how Shuusou Gyoku renders most of its

text. 🐷 Granted, it's not too bad with MS Gothic thanks to its embedded

bitmaps for font

heights between 7 and 22 inclusive, which replace the usual Bézier curve

rasterization for TrueType fonts with a rather quick bitmap lookup. However,

it would become a problem not only if future translations end

up choosing more complex fonts without embedded bitmaps, but also as soon as

we port the game to other systems. Nobody in their right mind would

integrate a cross-platform font renderer directly with a 3D graphics API… right?

Instead, let's refactor the game to render all its existing text to and from

a bitmap,

extending the way the in-game music title is rendered to the rest of the

game. Conceptually, this is also how the Windows Touhou games have always

rendered their text. Since they've always used Direct3D, they've always had

to blit GDI's output onto a texture. Through the definitions in

text.anm, this fixed-size texture is then turned into a sprite

sheet, allowing every rendered line of text to be individually placed on the

screen and animated.

However, the static nature of both the sprite sheet and the texture caused

its fair share of problems for thcrap's translation support. Some of the

sprites, particularly the ones for spell card titles, don't originally take

up the entire width of the playfield, cutting off translations long before

they reach the left edge. Consequently, thcrap's base patch

for the Windows Touhou games has to resize the respective sprites to

make translators happy. Before I added .ANM header

patching in late 2018, this had to be done through a complete modified

copy of text.anm for every game – with possibly additional

variants if ZUN changed the layout of this file between game versions. Not

to mention that it's bound to be quite annoying to manually allocate a

rectangle for every line of text we want to show. After all, I have at least two text-heavy future

features in mind already…

So let's not do exactly that. Since DirectDraw wants us to manage all

surfaces in a central place, we keep the idea of using a single surface for

all text. But instead of predefining anything about the surface layout, we

fully build up the surface at runtime based on whatever rectangles we need,

using a rectangle

packing algorithm… yup, I wouldn't have expected to enter such territory

either. For now, we still hardcode a fixed size that each piece of text is

allowed to maximally take up. But once we get translations, nothing is

stopping us from dynamically extending this size to fit even longer strings,

and fitting them onto the fixed screen space via smooth scrolling.

To prevent the surface from arbitrarily growing as the game wants to render

more and more text, we also reset all allocated rectangles whenever the game

state changes. In turn, this will also recreate the text surface to match

the new bounding box of all rectangles before the first prerendering call

with the new layout. And if you remember the first bullet point about

DirectDraw palettes in 8-bit mode, this also means that the text surface

automatically receives the current palette of the primary surface, giving

us correct colors even without requiring DxWnd's DC palette tweak. 🎨
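To give a rough idea of what such runtime layouting can look like, here is a minimal sketch of a shelf packer, one of the simplest rectangle packing strategies. This is an assumption chosen for brevity and not necessarily the algorithm used in the actual build. `TextRectPacker` and all of its members are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Rect {
    int left, top, w, h;
};

// Minimal shelf packer: fills a row from left to right, and opens a new row
// below it once the next rectangle would exceed the maximum width.
class TextRectPacker {
    int max_w;
    int cursor_x = 0;  // Next free X position on the current shelf
    int shelf_top = 0; // Y position of the current shelf
    int shelf_h = 0;   // Tallest rectangle on the current shelf
    int bounds_w = 0;
    std::vector<Rect> rects;

public:
    explicit TextRectPacker(int max_width) : max_w(max_width) {}

    // Registers a rectangle of the given size and returns its ID.
    std::size_t Register(int w, int h) {
        assert((w > 0) && (h > 0) && (w <= max_w));
        if ((cursor_x + w) > max_w) {
            shelf_top += shelf_h;
            cursor_x = 0;
            shelf_h = 0;
        }
        rects.push_back({ cursor_x, shelf_top, w, h });
        cursor_x += w;
        shelf_h = std::max(shelf_h, h);
        bounds_w = std::max(bounds_w, cursor_x);
        return (rects.size() - 1);
    }

    const Rect& Get(std::size_t id) const { return rects.at(id); }

    // Bounding box of all rectangles, i.e., the required surface size.
    int BoundsW() const { return bounds_w; }
    int BoundsH() const { return (shelf_top + shelf_h); }

    // Called on game state changes to start over with an empty layout.
    void Reset() { *this = TextRectPacker(max_w); }
};
```

The surface would then be recreated at `BoundsW()` × `BoundsH()` before the first prerendering call of each new layout.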

In fact, the need to dynamically create surfaces at custom sizes was the main reason why I had to look into DirectDraw surface management to begin with. The original game created all of its surfaces at once, at startup or after changing the bit depth in the main menu, which was a bad idea for many reasons:

- It hardcoded and limited the size of all sprite sheets,

- added another rendering-API-specific function that game code should not need to worry about,

- introduced surface IDs that have to be synchronized with the surface pointers used throughout the rest of the game,

- and was the main reason why the game had to distribute the six 320×240 ending pictures across two of the fixed 640×480 surfaces, which ended up causing the sprite reload bug in the ending. As implied in the issue, this was a DirectDraw bug that pretty much had to fix itself before I could port the game to OpenGL, and was the only bug where this was the case. Check the issue comments for more details about this specific bug.

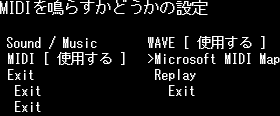

In the end, we get four different layouts for the text surface: One for the main menu, the Music Room, the in-game portion, and the ending. With, perhaps surprisingly, not too much text on either of them:

For the menus, the resulting packed layout reveals how I'm assigning a separately cached rectangle to each possible option – otherwise, they couldn't be arranged vertically on screen with this bitmap layout. Right now, I'm only storing all text for the current menu level, which requires text to be rendered again when entering or leaving submenus. However, I'm allocating as many rectangles as required for the submenu with the largest number of items to at least prevent the single text surface from being resized while navigating through the menu. As a side effect, this is also why you can see multiple Exit labels: These simply come from other submenus with more elements than the currently visited Sound / Music one.

Still, we're re-rasterizing whole lines of text exactly as they appear on screen, and are even doing so multiple times to apply any drop shadows. Isn't that exactly what every text rendering tutorial nowadays advises against doing? Why not directly go for the classic solution to this problem and render using a font texture atlas? Well…

- Most of the game text is still in Japanese. If we were to build a font

atlas in advance, we'd have to add a separate build step that collects all

needed codepoints by parsing all text the game would ever print, adding a

build-time dependency on the original game's copyrighted data files. We'd

also have to move all hardcoded strings to a separate file since we surely

don't want to parse C++ manually during said build step. Theoretically, we

would then also give up the idea of modding text at run-time without

re-running that build step, since we'd restrict all text to the glyphs we've

rasterized in the atlas… yeah, that's more than enough reasons for static

atlas generation to be a non-starter.

  OK, then let's build the atlas dynamically, adding new glyphs as we encounter them. Since this game is old, we can even be a bit lazy as far as the packing is concerned, and don't have to get as fancy as the GIF in the link above. Just assume a fixed height for each glyph, and fill the atlas from left to right. We can even clear it periodically to keep it from getting too big, like before entering the Music Room, the in-game portion, or the ending, or after switching languages once we have translations. Should work, right?

- Except that most text in Shuusou Gyoku comes with a shadow, realized by first drawing the same string in a darker color and displaced by a few pixels. With a 3D renderer, none of this would be an issue because we can define vertex colors. But we're still using DirectDraw, which has no way of applying any sort of color formula – again, all it can do is take a rectangle and blit it somewhere else. So we can't just keep one atlas with white glyphs and let the renderer recolor it. Extending Shuusou Gyoku's Direct3D code with support for textured quads is also out of the question because then we wouldn't have any text in the Direct3D-less 8-bit mode. So what do we do instead? Throw the atlas away on every color change? Keep multiple atlases for every color we've seen so far? Turn shadows into a high-level concept? Outright forgetting the idea seems to be the best choice here…

- For a rather square language like Japanese where one Shift-JIS codepoint always corresponds to one glyph, a texture atlas can work fine and without too much effort. But once we support languages with more complex ligatures, we suddenly need to get a shaping engine from somewhere, and directly interact with it from our rendering code. This necessarily involves changing APIs and maybe even bundling the first cross-platform libraries, which I wanted to avoid in an already packed and long overdue delivery such as this one. If we continue to render line-by-line, translations would only need a line break algorithm.

- Most importantly though: It's not going to matter anyway. The game ran fine on early 2000s hardware even though it called TextOut() every frame, and any approach that caches the result of this call is going to be faster.

While the Music Room and the ending can be easily migrated to a prerendering

system, it's much harder for the main menu. Technically, all option

strings of the currently active submenu are rewritten every frame, even

though that would only be necessary for the scrolling MIDI device name in

the Sound / Music submenu. And since all this rewriting is done

via a classic sprintf() on fixed-size char

buffers, we'd have to deploy our own change detection before prerendering

can make any performance difference.

In essence, we'd be shifting the text rendering paradigm from the original

immediate approach to a more retained one. If you've ever used any of the

hot new immediate-mode GUI or web frameworks that have become popular over

the last 10 years, your alarm bells are probably already ringing by now.

Adding retained elements is always a step back in terms of code quality, as

it increases complexity by storing UI state in a second place.

Wouldn't it be better if we could just stay with the original immediate

approach then? Absolutely, and we only need a simple cache system to get

there. By remembering the string that was last rendered to every registered

rectangle, the text renderer can offer an immediate API that combines the

distinct Prerender() and Blit() steps into a

single Render() call. There still has to be an initialization

point that registers all rectangles for each game state (which,

surprisingly, was not present for the in-game portion in the original code),

but the rendering code remains architecturally unchanged in how we call the

text renderer every frame. As long as the text doesn't change, the text

renderer just blits whatever it previously rendered to the respective

rectangle. With an API like this, the whole pre-rendering part turns into a

mere implementation detail.
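A minimal sketch of this caching idea, assuming a much simplified interface: `CachedTextRenderer` is a hypothetical class, and its private `Prerender()` and `Blit()` only count calls instead of touching GDI or DirectDraw, so that the effect of the cache becomes measurable.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>
#include <string_view>
#include <vector>

class CachedTextRenderer {
    // The string that was last rasterized into each registered rectangle.
    std::vector<std::optional<std::string>> last_rendered;

    // Stands in for the expensive GDI text rasterization.
    void Prerender(std::size_t, std::string_view) { prerender_calls++; }

    // Stands in for the cheap rectangle blit to the backbuffer.
    void Blit(std::size_t) { blit_calls++; }

public:
    unsigned prerender_calls = 0;
    unsigned blit_calls = 0;

    // Initialization point: registers one rectangle and returns its ID.
    std::size_t Register() {
        last_rendered.emplace_back();
        return (last_rendered.size() - 1);
    }

    // Immediate-style API, meant to be called every frame. The text is only
    // rasterized again if it differs from what the rectangle already shows.
    void Render(std::size_t rect_id, std::string_view text) {
        assert(rect_id < last_rendered.size());
        auto& cached = last_rendered[rect_id];
        if (!cached || (*cached != text)) {
            Prerender(rect_id, text);
            cached = std::string(text);
        }
        Blit(rect_id);
    }
};
```

Calling `Render()` with the same string for 60 frames in a row would then rasterize once and blit 60 times, without the calling code having to track any state of its own.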

So, how much faster is the result? Since I can only measure non-VSynced performance in a quite rudimentary way using DxWnd's FPS counter, it highly depends on the selected renderer. Weirdly enough, even just switching font creation to the Unicode APIs tripled the FPS inside the Music Room when rendering with OpenGL? That said, the primary surface renderer seems to yield the most realistic numbers, as we still stay entirely within DirectDraw and perform no API wrapping. Using this renderer, I get speedups of roughly:

- ~3.5× in the Music Room,

- ~1.9× during in-game dialog, and

- ~1.5× in the main menu.

Not bad for something I had to do anyway to port the game away from DirectDraw! Shuusou Gyoku is rather infamous among the vintage computer scene for being ridiculously unoptimized, so I should definitely be able to get some performance gains out of the in-game portion as well.

For a final test of all the new blitting code, I also tried running outside DxWnd to verify everything against real and unpatched DirectDraw. Amusingly, this revealed how blitting from the new text surface seems to reach the color mapping limits of the DWM mitigation in 8-bit mode:

(Screenshot caption: the #FFFFFF text color turns into #E4E3BB in the main menu.)

8-bit mode did render correctly when I ran the same build in a Windows 98 VirtualBox on the same system though, so it's not worth looking into a mode that the system reports as unsupported to begin with. Let's leave this as somewhat of a visual reminder for players to select 32-bit mode instead.

Alright, enough about the annoying parts of GDI and DirectDraw for now.

Let's stop looking back and start looking forward, to a time within this

Seihou revolution when we're going to have lots of new options in the main

menu. Due to the nature of delivering individual pushes, we can expect lots

of revisions to the config file format. Therefore, we'd like to have a

backward-compatible system that allows players to upgrade from any older

build, including the original 秋霜玉.exe, to a newer one. The

original game predominantly used single-byte values for all its options, but

we'd like our system to work with variables of any size, including strings

to store things like the

name of the selected MIDI device in a more robust way. Also, it's pure

evil to reset the entire configuration just because someone tried to

hex-edit the config file and didn't keep the checksum in mind.

It didn't take long for me to arrive at a common

Size()/Read()/Write() interface. By

using the same interface for both arrays and individual values, new config

file versions can naturally expand older ones by taking the array of option

references from the previous version and wrapping it into a new array,

together with the new options.

The classic way of implementing this in C++ involves a typical

object-oriented class hierarchy: An Option base class would

define the interface in the form of virtual abstract functions, and the

Value, Array, and ConfigVersion

subclasses would provide different implementations. This works, but

introduces quite a bit of boilerplate, not to mention the runtime bloat from

all the virtual functions which Visual C++ can't inline. Why should we do

any runtime dispatch here? We know the set of configuration options

at compile time, after all…

Let's try looking into the modern C++ toolbox and see if we can do better.

The only real challenge here is that the array type has to support

arbitrarily sized option value types, which sounds like a job for

template parameter packs. If we save these into a

std::tuple, we can then "iterate" over all options with std::apply

and fold

expressions, in a nice functional style.

I was amazed by just how clearly the "crazy" modern C++ approach with

template parameter packs, std::apply() over giant

std::tuples, and fold expressions beats a classic polymorphic

hierarchy of abstract virtual functions. With the interface moved into an

even optional concept, the class hierarchy can be completely

flattened, which surprisingly also makes the code easier to both read and

write.
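As a heavily simplified C++17 sketch of how these pieces can fit together: `Value` and `Array` below are reduced to the bare minimum and only share the Size()/Read()/Write() interface by convention. The member names, the pointer-advancing signatures, and the restriction to trivially copyable types are assumptions made for this example, and validation, strings, and the optional concept are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <tuple>
#include <vector>

// A single option: a reference to a trivially copyable variable.
template <typename T> struct Value {
    T& ref;

    std::size_t Size() const { return sizeof(T); }
    void Write(uint8_t*& out) const {
        std::memcpy(out, &ref, sizeof(T));
        out += sizeof(T);
    }
    void Read(const uint8_t*& in) const {
        std::memcpy(&ref, in, sizeof(T));
        in += sizeof(T);
    }
};

// Any number of options of any type, including nested arrays.
template <typename... Options> struct Array {
    std::tuple<Options...> options;

    explicit Array(Options... opts) : options(opts...) {}

    // std::apply() expands the tuple back into a parameter pack, and the
    // fold expressions then "iterate" over all options in declaration order.
    std::size_t Size() const {
        return std::apply([](const auto&... o) {
            return (std::size_t{ 0 } + ... + o.Size());
        }, options);
    }
    void Write(uint8_t*& out) const {
        std::apply([&](const auto&... o) { (o.Write(out), ...); }, options);
    }
    void Read(const uint8_t*& in) const {
        std::apply([&](const auto&... o) { (o.Read(in), ...); }, options);
    }
};
```

A newer config version can then wrap the previous one, as in `Array v1(v0, Value<uint32_t>{ new_option })`, and all three functions recurse through the nesting without a single virtual call.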

Here's how the new system works from the player's point of view:

- The config files now use a kanji-less and explicitly forward-compatible naming scheme, starting with SSG_V00.CFG in the P0251 build. The format of this initial version simply includes all values from the original 秋霜CFG.DAT without padding bytes or a checksum. Once we release a new build that adds new config options, we go up to SSG_V01.CFG, and so on.

- When loading, the game starts at its newest supported config file version. If that file doesn't exist, the game retries with each older version in succession until it reaches the last file in the chain, which is always the original 秋霜CFG.DAT. This makes it possible to upgrade from any older Shuusou Gyoku build to a newer one while retaining all your settings – including, most importantly, which shot types you unlocked the Extra Stage with. The newly introduced settings will simply remain at their initial default in this case.

- When saving, the game always writes all versions it knows about, down to and including the original 秋霜CFG.DAT, in the respective version-specific format. This means that you can change options in a newer build and they'll show up changed in older builds as well if they were supported there.

  And yes, this also means that we can stop writing the unsupported 32-bit bit depth setting to 秋霜CFG.DAT, which would cause a validation failure on the original build. This is now avoided by simply turning 32-bit into 16-bit just for the configuration that gets saved to this file. And speaking of validation failures…

- The SSG_V*.CFG files don't use checksums at all, which allows you to freely hex-edit them. Each configuration value is now validated individually, and reset to its default if you hex-edited it to something invalid. In the future, we could even show an in-engine window at startup that lists these invalid options and the defaults they were reset to, if we get backer support for this idea.

  - This per-value validation is also done if my builds loaded the original 秋霜CFG.DAT. The checksum is still written for compatibility with the original build, but my builds ignore it.

With that, we've got more than enough code for a new build:

This build also contains two more fixes that didn't fit into the big DirectDraw or configuration categories:

- The P0226 build had a bug that allowed invalid stages to be selected for replay recording. If the ReplaySave option was [O F F], pressing the ⬅️ left arrow key on the StageSelect option would overflow its value to 255. The effects of this weren't all too serious: The game would simply stay on the Weapon Select screen for an invalid stage number, or launch into the Extra Stage if you scrolled all the way to 131. Still, it's fixed in this build.

  Whoops! That one was fully my fault.
- The render time for the in-game music title is now roughly cut in half:

  Achieved by simply trimming trailing whitespace and using slightly more efficient GDI functions to draw the gradient. Spending 4 frames on rendering a gradient is still way too much though. I'll optimize that further once I actually get to port this effect away from GDI.

These videos also show off how DxWnd's DC palette bug affected the original game, and how it doesn't affect the P0251 build.
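
The StageSelect bug from the first item is the classic wraparound you get when decrementing an unsigned 8-bit value at 0. Here is a minimal sketch, assuming the option is stored as a `uint8_t` whose lowest valid value is 0. Both function names are hypothetical, and the clamp is just one possible fix, not necessarily the one that went into the build:

```cpp
#include <cstdint>

// Buggy variant: 0 - 1 wraps around to 255 in unsigned 8-bit arithmetic.
uint8_t stage_left_buggy(uint8_t stage) {
    return static_cast<uint8_t>(stage - 1);
}

// One possible fix: refuse to go below the lowest valid stage.
uint8_t stage_left_clamped(uint8_t stage, uint8_t min_stage) {
    return (stage > min_stage) ? static_cast<uint8_t>(stage - 1) : min_stage;
}
```

With these definitions, `stage_left_buggy(0)` yields 255, while `stage_left_clamped(0, 0)` stays at 0.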

These 6 pushes still left several of Shuusou Gyoku's DirectDraw portability issues unsolved, but I'd better look at them once I've set up a basic OpenGL skeleton to avoid any more premature abstraction. Since the ultimate goal is a Linux port, I might as well already start looking at the current best platform layer libraries. SDL would be the standard choice here, and while SDL_ttf looks regrettably misdesigned, the core SDL library seems to cover all we could possibly want for Shuusou Gyoku, including a 2D renderer… wait, what?!

Yup. Admittedly, I've been living under a rock as far as SDL is concerned, and thus wasn't aware that SDL 2 introduced its own abstraction for 2D rendering that just happens to almost exactly cover everything we need for Shuusou Gyoku. This API even covers all of the game's Direct3D code, which only draws alpha-blended, untextured, and pre-transformed vertex-colored triangles and lines. It's the exact abstraction over OpenGL I thought I had to write myself, and such a perfect match for this game that it would be foolish to go for a custom OpenGL backend – especially since SDL will automatically target the ideal graphics API for any given operating system.

Sadly, the one thing SDL_Renderer is missing is something equivalent to pixel shaders, which we would need to replicate the 西方 Project lens ball effect shown at startup. Looks like we have to drop into a completely separate, unaccelerated rendering mode and continue to software-render this one effect before switching to hardware-accelerated rendering for the rest of the game. But at least we can do that in a cross-platform way, and don't have to bother with shading languages – or, perhaps even worse, SDL's own shading language.

If we were extremely pedantic, we'd also have to do the same for the 📝 unused spiral effect that was originally intended for the staff roll. Software rendering would be even more annoying there, since we don't just have to software-render these staff sprites, but also the ending picture and text, complete with their respective fade effects. And while I typically do go the extra mile to preserve whatever code was present in these games, keeping this effect would just needlessly drive up the cost of the SDL backend. Let's just move this one to the museum of unused code and no longer actively compile it. RIP spiral 🥲 At least you're still preserved in lossless video form.

Now that SDL has become an integral part of Shuusou Gyoku's portability plan rather than just being one potential platform layer among many, the optimal order of tasks has slightly changed. If we stayed within the raw Win32 API any longer than absolutely necessary, we'd only risk writing more Win32-native code for things like audio streaming that we'd then have to throw away and rewrite in SDL later. Next up, therefore: Staying with Shuusou Gyoku, but continuing in a much more focused manner by fixing the input system and starting the SDL migration with input and sound.